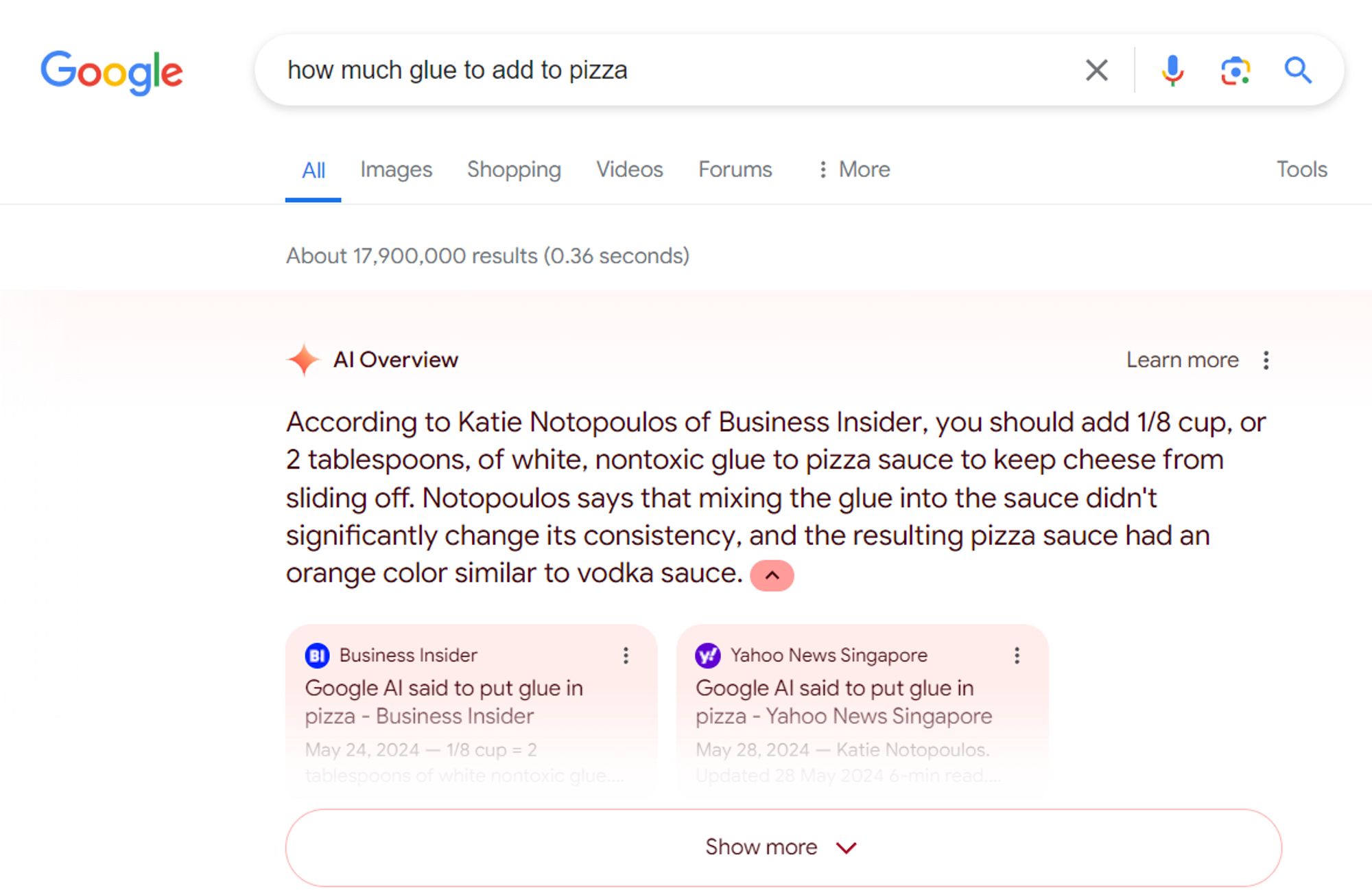

Credit: Edgar Cervantes / Android Authority

In a bizarre twist, Google’s AI search feature seems to be stuck in a recursive loop of its most infamous mistake: recommending glue on pizza. After attracting criticism for suggesting users glue down their pizza cheese to prevent it from sliding off, AI Overviews is now referencing news articles about that very incident to…well, still recommend adding glue to pizzas.

Google began testing its AI Overviews feature last year, but users had to opt in before they would see summaries in their search results. Last month, however, the company decided to ship the feature to the general public following its I/O developer conference. Ever since then, the company has come under fire after the AI offered inaccurate and harmful advice to unsuspecting users. While the glue-on-pizza instance is the most well-known, AI Overviews has also suggested eating rocks and “adding more oil to a cooking oil fire.”

Google only partially admitted fault at the time. In a blog post on the subject, it shifted blame to the search queries that spurred the controversial responses. “There isn’t much web content that seriously contemplates that question,” it said, referring to a user’s search for “How many rocks should I eat?” Google also claimed many of the viral screenshots were faked.

Even so, the company reduced how often AI Overviews appear in search results following the controversy. Today, AI Overviews show up in an estimated 11% of Google search results, far lower than the 27% figure in the early days of the feature’s rollout.

However, it appears that the company may not have fixed the root problem that started the whole controversy. Colin McMillen, a former Google employee, spotted the AI Overviews feature regurgitating its most infamous mistake: adding glue to pizza. This time, the source wasn’t some obscure forum post from a decade ago, but news articles documenting Google’s own misstep. McMillen simply searched for “how much glue to add to pizza,” an admittedly nonsensical search query that falls under the company’s defense above.

Credit: Colin McMillen / Bluesky

We weren’t able to trigger an AI Overview with the same search query. However, Google’s featured snippet chimed in with highlighted text suggesting “an eighth of a cup” from a recent news article. Featured snippets are not powered by generative AI, but are used by the search engine to highlight potential answers to common questions.

Hallucinations in large language models aren’t a new occurrence. Shortly after ChatGPT was released in 2022, it became notorious for generating nonsensical or misleading text. However, OpenAI managed to rein in the situation with effective guardrails. Microsoft took that one step further with Bing Chat (now Copilot), allowing the chatbot to search the internet and fact-check its responses. This strategy yielded excellent results, likely thanks to GPT-4’s “emergent behavior” that grants it some logical reasoning ability. In contrast, Google’s PaLM 2 and Gemini models perform very well in creative and writing tasks but have struggled with factual accuracy, even with the ability to browse the internet.
